In the previous blog post I argued in favor of undefined behavior in C. The behaviors I was talking about, such as integer overflow, would be inefficient or counter-intuitive if they were defined. The downside is that, in their current form, they cannot be diagnosed by the compiler. However, while the C standard lists almost 200 undefined behaviors, closer analysis shows that for many of them the compiler is quite capable of giving an error message, or a natural definition is perfectly possible.

Check out a couple of examples:

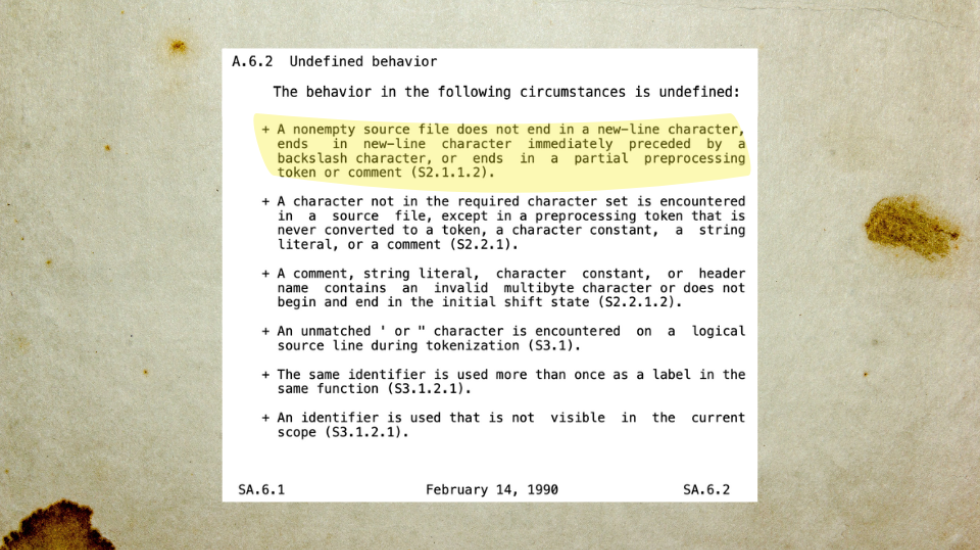

- If a non-empty source file does not end with a new-line character, the behavior is undefined (C18:5.1.1.2). The historic reason for this is that on some platforms the operating system does not deliver to the compiler a final line that is not terminated by a new-line character, so the compiler cannot know its input is incomplete.

- If a function with external linkage (‘extern’) is declared ‘inline’, but its definition is in another translation unit rather than the same one, the program’s behavior is undefined (C18:6.7.4).
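To illustrate how little work the first diagnosis needs, here is a minimal sketch of the check a compiler front end could run on a source file it has already read into memory. The names `src_check` and `check_source_end` are hypothetical and chosen only for this example. The sketch also assumes that end-of-line indicators have already been mapped to `'\n'`, and it ignores trigraphs.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical diagnostic codes; these names are not from the C standard. */
enum src_check {
    SRC_OK,                /* empty file, or ends in a proper new-line */
    SRC_NO_FINAL_NEWLINE,  /* non-empty, last character is not '\n' */
    SRC_BACKSLASH_NEWLINE  /* final new-line is preceded by a backslash */
};

/* Classify the end of a source file held in memory as buf[0..len-1]. */
enum src_check check_source_end(const char *buf, size_t len)
{
    assert(buf != NULL || len == 0);

    if (len == 0)
        return SRC_OK;                /* the rule only covers non-empty files */
    if (buf[len - 1] != '\n')
        return SRC_NO_FINAL_NEWLINE;
    if (len >= 2 && buf[len - 2] == '\\')
        return SRC_BACKSLASH_NEWLINE; /* also excluded by C18:5.1.1.2 */
    return SRC_OK;
}
```

A front end that has the whole file in a buffer could call `check_source_end(buf, len)` once and report an error for anything other than `SRC_OK`, instead of leaving the outcome undefined.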

If you use one of these ‘constructs’ in your program, it may compile without diagnostics, and the behavior of the program will probably be exactly what you expect.

So what’s the issue?

Technically, your program’s behavior remains undefined. It only functions the way you expect it to because of a hidden, unspoken, and undocumented agreement between the compiler developer and your expectations. Is that what you want? Probably not.

It is also a problem for us. As a creator of test suites, the team here at Solid Sands cannot write test cases for these constructs, because they do not have defined results. Maybe your response to that is something along the lines of ‘why not just generate test cases that verify the obvious behavior’, or ‘why should the test not expect the compiler to give an error’. But remember, we are testing your compiler, not your program, so if we followed that line of thought, your program would be relying on compiler properties that are not defined in the standard. That’s something you should probably not be comfortable with.

I would argue that the best solution is to remove these compile-time-diagnosable undefined behaviors from the C specification altogether and declare them ill-formed. You could counter-argue that doing so would introduce incompatible changes to the language. However, that is not true. No program that strictly conforms to the language specification would need changing, because by definition such a program does not contain undefined behavior.

The only argument I have heard against revising the C standard in this way is that it would render obsolete existing compilers that implement expected, perfectly reasonable defined behaviors for these undefined constructs. However, I don’t believe that’s a solid argument in the 2020s. Firstly, most of these undefined constructs were documented as far back as the 1989 ANSI C standard, so there are no surprises to be had. Secondly, show me a compiler that actually defines and documents the fact that it adds an implicit new-line character to a source file that lacks one. Thirdly, if you have a large body of code that depends on a specific compiler’s behavior, then you could and should keep using that compiler, because in truth that code is non-portable. It contains undefined constructs according to C standard versions going back many years.

I believe there is an opportunity here to make a significant step forward in the future of C, which despite its drawbacks has qualities unmatched by any other language. The processes required to build safety- and security-critical software deserve a definition of C without unnecessary loose ends.

I am sure many of you will have strong opinions, so why not enter the debate?

If you want to discuss the issues, please send us an email.

Dr. Marcel Beemster, CTO